The notebook is defined in terms of 40 Python cells and requires familiarity with the main libraries used: Python scikit-learn for machine learning, Python numpy for scientific computing, Python pandas for managing and analyzing data structures, and matplotlib and seaborn for visualization of the data.

Use Watson Machine Learning to save and deploy the model so that it can be accessed Select the model that’s the best fit for the given data set, and analyze which features have low and significant impact on the outcome of the prediction. Train the model by using various machine learning algorithms for binary classification.Įvaluate the various models for accuracy and precision using a confusion matrix. Split the data into training and test data to be used for model training and model validation. Prepare the data for machine model building (for example, by transforming categorical features into numeric features and by normalizing the data).

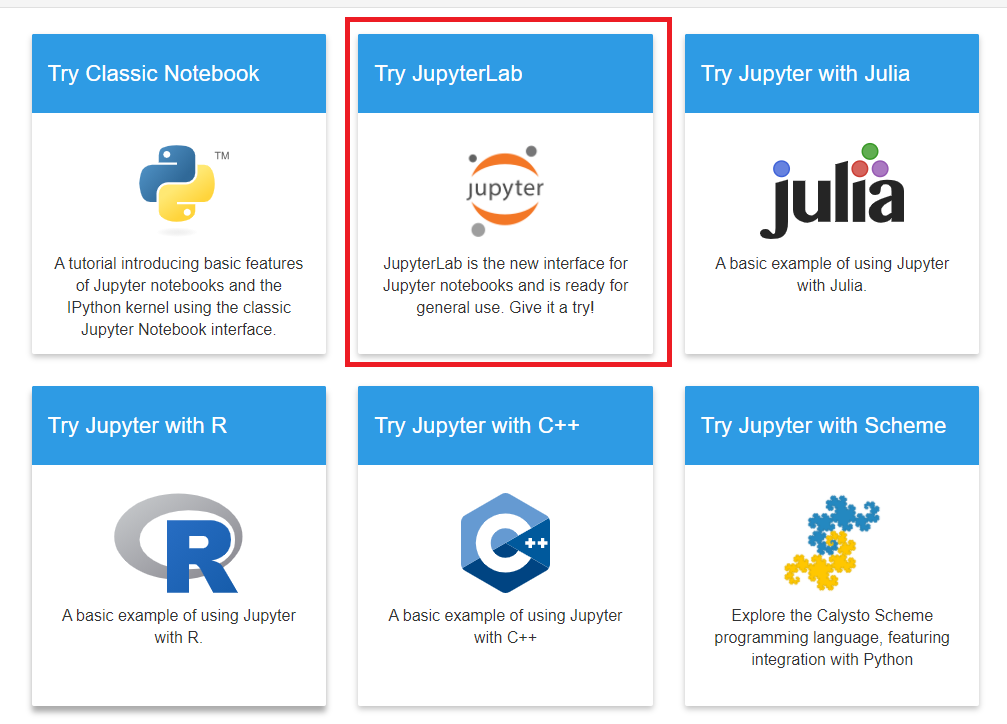

The data set has a corresponding Customer Churn Analysis Jupyter Notebook (originally developed by Sandip Datta, which shows the archetypical steps in developing a machine learning model by going through the following essential steps:Īnalyze the data by creating visualizations and inspecting basic statistic parameters (for example, mean or standard variation). We start with a data set for customer churn that is available on Kaggle. This tutorial explains how to set up and run Jupyter Notebooks from within IBM® Watson™ Studio.
